How to Create Automatic Chapters in a Video Using Open Source Models

In this article, we will use open source models to build a multimodal pipeline for automatic chaptering in long-form videos.

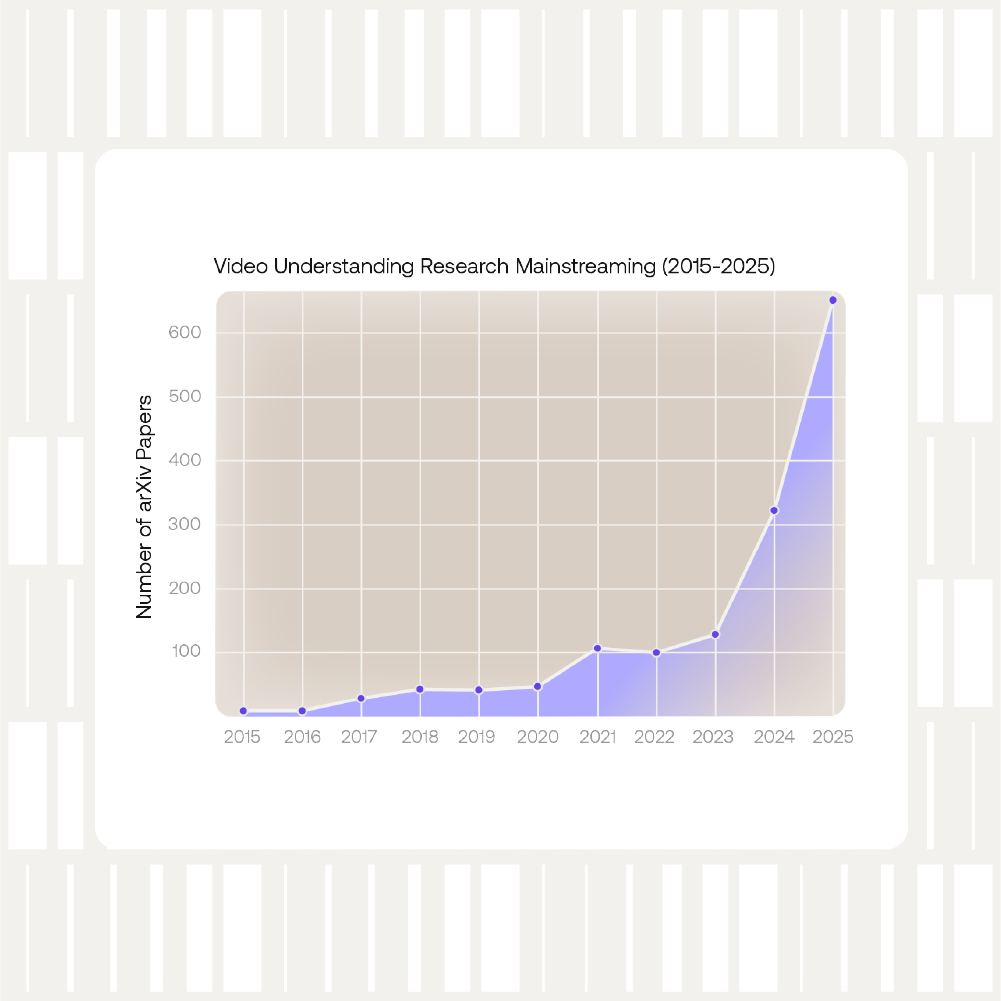

Every minute, a staggering 500+ hours of video content is uploaded to YouTube alone. When we consider all social media platforms, broadcast content, and other video-sharing sites, the sheer volume of video data becomes overwhelming. A significant portion of these videos are long-form content. Despite the abundance of video data, most video understanding technologies have traditionally focused on short-form content, primarily due to computational limitations and the need for temporal coherence.

One critical pre-processing step in long-form video management is the segmentation of videos into distinct chapters. These chapters consist of semantically coherent segments, which enable:

- A better user experience, as users can quickly navigate through the video

- Increased efficiency of search engines

- More precise analysis, allowing algorithms to target relevant segments of the video.

In this article, we will use open source models to build a multimodal pipeline for automatic chaptering in long-form videos. The full source code used in this article is available at this Google Colab link. The code can be run with or without a GPU.

Core Idea

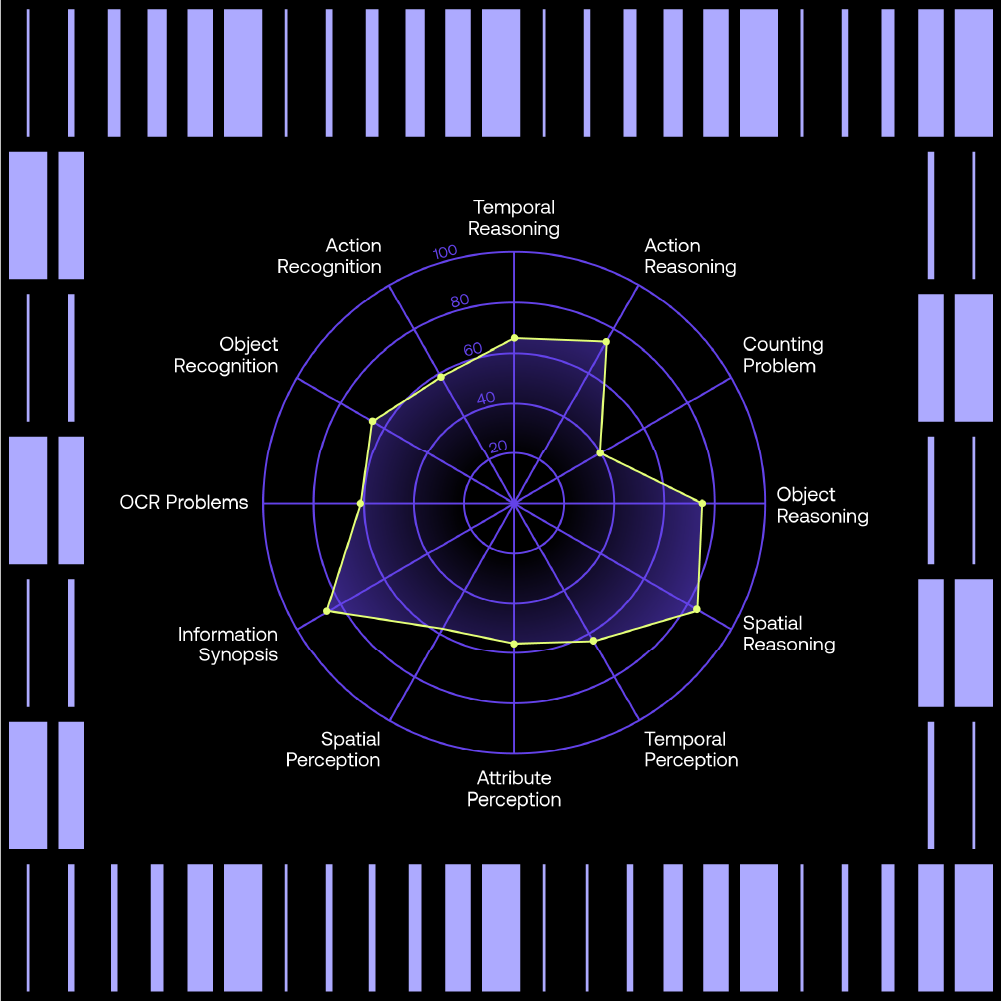

The core idea of this AI system is to compute and compare key shot descriptors that make up a video. Pre-trained models on large-scale datasets possess powerful zero shot capabilities, enabling an unsupervised video chaptering system. Embeddings from these models are compared using various distance functions enabling the detection of chapter boundaries. To ensure a robust chaptering system, several modalities are used such as shot frames, transcript, raw audio and speaker identification.

Shot Detection

Many video understanding models, when trained on short videos, typically rely on processing one frame per second. This results in a small number of frames for short videos. For instance, Video-LLAVA only extracts 8 frames uniformly. However, applying this approach to long-form videos can be computationally prohibitive. A more efficient preprocessing strategy involves detecting shots within the video. A shot is a group of continuous frames captured by a single camera without interruption, meaning that frames within a shot often have the same semantic information. Therefore, selecting one or more representative frames from each shot can significantly reduce the computational load while still capturing essential information. To detect shots, the PySceneDetect package is used. While this package offers several customizable parameters, a good starting point is to use the ContentDetector class.

from scenedetect import SceneManager, open_video, ContentDetector

video_path = './royal_wedding.mp4'

video = open_video(video_path)

scene_manager = SceneManager()

scene_manager.add_detector(ContentDetector(threshold=27, min_scene_len=48))

Modality Extraction

Image Modality

For each shot, the central frame is selected. A CLIP VIT-L/14 is used to extract embeddings from each frame. This model has a vision and a text encoder which were trained to maximize the similarity of image and text pairs via a contrastive loss. The vision encoder is only needed so a method is defined to extract embeddings by using only the vision part of the CLIP model.

from PIL import Image

from transformers import CLIPProcessor, CLIPModel

def get_img_embeddings(model, frame_path):

image = Image.open(frame_path).convert('RGB')

inputs = processor(images=image, return_tensors="pt", padding=True).to(DEVICE)

pixel_values = inputs['pixel_values']

return model.vision_model(pixel_values, return_dict=False)[1]

model = CLIPModel.from_pretrained("openai/clip-vit-large-patch14").to(DEVICE)

processor = CLIPProcessor.from_pretrained("openai/clip-vit-large-patch14")

Text Modality & Speaker Identification

A combination of diarization and speech-to-text models are used to get the transcript of the video. You can use the amazing open source WhisperX repository, which packages a whole pipeline for speech-to-text including diarization and word alignment. The transcript consists of segments of speech and an ID for each speaker in a JSON format. A code is provided to read it and to extract segments of speech.

Transcript segments are aligned with detected shots by computing the intersection over the union of segments and shot timestamps. To avoid creating small chunks of words with insufficient context, the entire transcript segment is assigned to a shot rather than being shot-based. To obtain text embeddings, the General Text Embeddings (GTE-large) model is used. This lightweight model is trained on a large-scale corpus of relevant text pairs. This knowledge aids in comparing audio segments from different shots and helps detect chapters.

transcript = read_transcript('./royal_wedding_transcript.json')

speaker_by_shot, sentences_by_shot = get_speaker_sentences_by_shot(shots, transcript)

nlp_model = sentence_transformers.SentenceTransformer('thenlper/gte-large').to(DEVICE)

Raw Audio Features

Audio speech represents a significant portion of the audio information in a video. However, not every video necessarily contains audio speech. Features from the raw audio signal can aid in creating chapters for videos with little to no audio speech data. Mel-Frequency Cepstral Coefficients (MFCC) features are extracted using the Librosa library. These audio features are aligned with shot timestamps by averaging the different MFCC values for a given shot, providing an audio representation of each shot.

def align_features_and_shot(features, step, shot):

tc_in, tc_out = shot.start_second, shot.end_second

idx_in, idx_out = int(tc_in // step), int(tc_out // step)

shot_features = features[:, idx_in:idx_out + 1]

mean_shot_features = np.mean(shot_features, axis=1) # mean shot intensities

mean_shot_features = np.nan_to_num(mean_shot_features, nan=1e-6) # Some nan values can appear

return mean_shot_features

def get_audio_features(audio_path):

y, sr = librosa.load(audio_path)

hop_length = 512

mfcc = librosa.feature.mfcc(y=y, sr=sr, hop_length=hop_length)

step = hop_length / sr # in seconds

return mfcc, step

Compute Distance

Now that features have been computed for each shot, we can calculate the distances between consecutive shots. For the image and text embeddings, cosine distance is used, as it is an effective similarity function for trained embeddings. Euclidean distance is computed for the MFCC features, as they represent the mean of intensities. For the speaker information, a simple rule is applied: the distance is 0 if the speakers are the same and 1 if the speakers are different.

def compute_distance(shots, sentences, speakers, audio_features):

"""

Computes distance between two shots

Args:

shots (Tuple[Shot]): tuple of two shots to compare

sentences (Tuple[List[str]]): tuple of list of shot's sentences

audio_features (np.Array): MFCC features array of the full audio

Returns:

float: total distance between input shots

"""

# Get Features

shot_a, shot_b = shots

sentences_a, sentences_b = sentences

img_embs_a, text_embs_a, audio_mfcc_a = get_features(shot_a, sentences_a, audio_features)

img_embs_b, text_embs_b, audio_mfcc_b = get_features(shot_b, sentences_b, audio_features)

# Compute Distances

img_dist = cosine(img_embs_a.cpu().detach().numpy()[0], img_embs_b.cpu().detach().numpy()[0])

text_dist = cosine(text_embs_a, text_embs_b)

speaker_dist = 1 if speakers[0] != speakers[1] else 0

audio_dist = euclidean(audio_mfcc_a / np.linalg.norm(audio_mfcc_a),

audio_mfcc_b / np.linalg.norm(audio_mfcc_b))

tot = (img_dist + text_dist + 0.5 * speaker_dist + 0.3 * audio_dist) / 2.8

return totBased on the distances, chapters can now be created. If the distance between two consecutive shots is above a set threshold, then a chapter should begin at this shot timestamp.

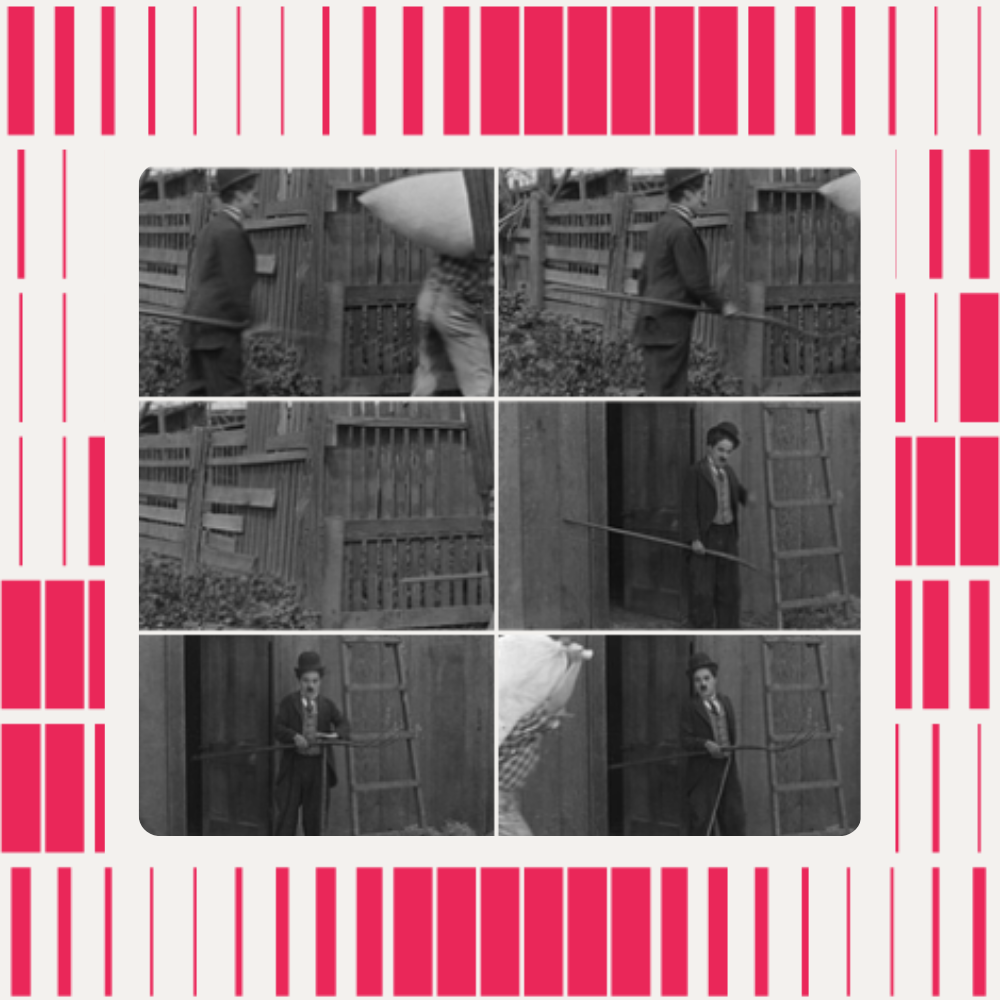

The image below shows the 33 central frames of the shots in the video. Rectangles of the same color correspond to chapters of consecutive shots. Analyzing the chapter results, we can clearly observe a logical grouping. For example, the first six shots are grouped together as they all depict Windsor Castle. The second chapter is an interview with a British royal expert. The next six shots feature the Myna association, which was one of the organizations British people were asked to donate to. However, the chapterization is not perfect, as one could argue that the last two chapters could be grouped together.

Conclusion

To conclude, we have presented a strong starting point for detecting chapters in a video based on the distance computation of multimodal features from pretrained models. This shot-based approach could be improved by incorporating preprocessing and postprocessing steps. For example, enforcing a minimum chapter duration could prevent the creation of very short chapters, addressing the issue seen in the last chapter of the above example.

This non-supervised approach already shows promising results. At Moments Lab, we have also transformed this approach into a supervised model by adding a trainable late fusion block. More details can be found in the paper we published, where we demonstrate excellent results (82% of precision at IOU of 90%) in identifying stories in TV newscasts. Additionally, our system allows for adjusting segmentation parameters based on the type of content, such as grayscale content, rushes with minimal speech, or press conferences with extensive speech. This ensures that our technology adapts to different content types, providing a more robust and versatile solution.

Finally, each chapter should have a title, which can be generated using a large language model (LLM) based on the transcript and visual descriptions from the chapter. A future blog post will cover this aspect in more detail.