MXT-2: A New Leap in Hierarchical Video Understanding

Our recent benchmark evaluation of the MXT-2 model highlights its strengths in general video understanding, automatic video chaptering, video summarization, and speaker diarization.

Video understanding continues to evolve rapidly, with state-of-the-art models now reaching impressive capabilities. Today, we're excited to share insights from our recent benchmark evaluation of the MXT-2 model, highlighting its strengths, especially in general video understanding, automatic video chaptering precision, video summarization quality and speaker diarization.

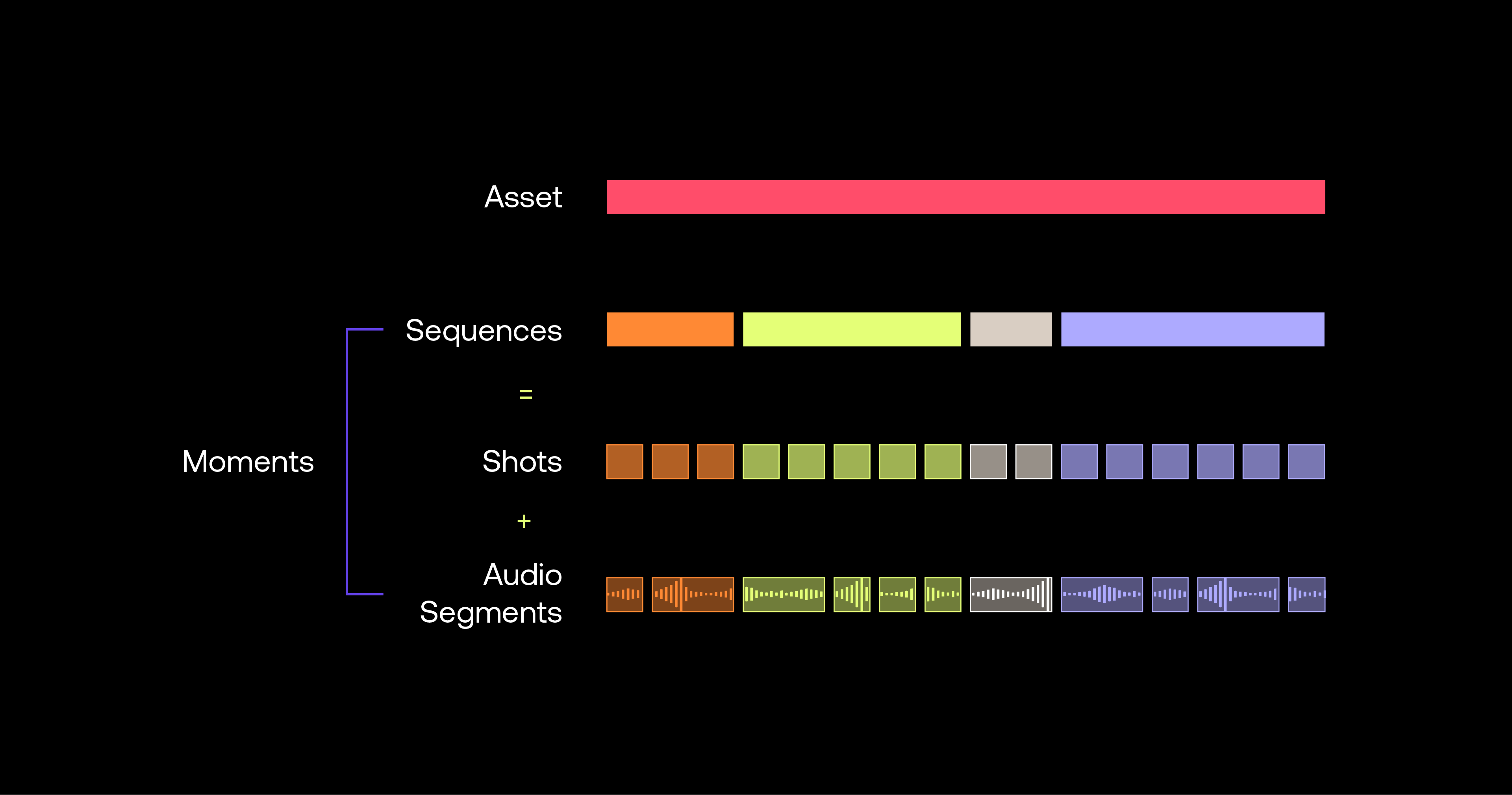

MXT-2 is Moments Lab’s proprietary technology that understands, indexes and summarizes video content with a structured hierarchical approach that splits the content into smaller chunks. More details can be found in the MXT-1.5 blog post.

MXT-2: Enhancing General Video Understanding

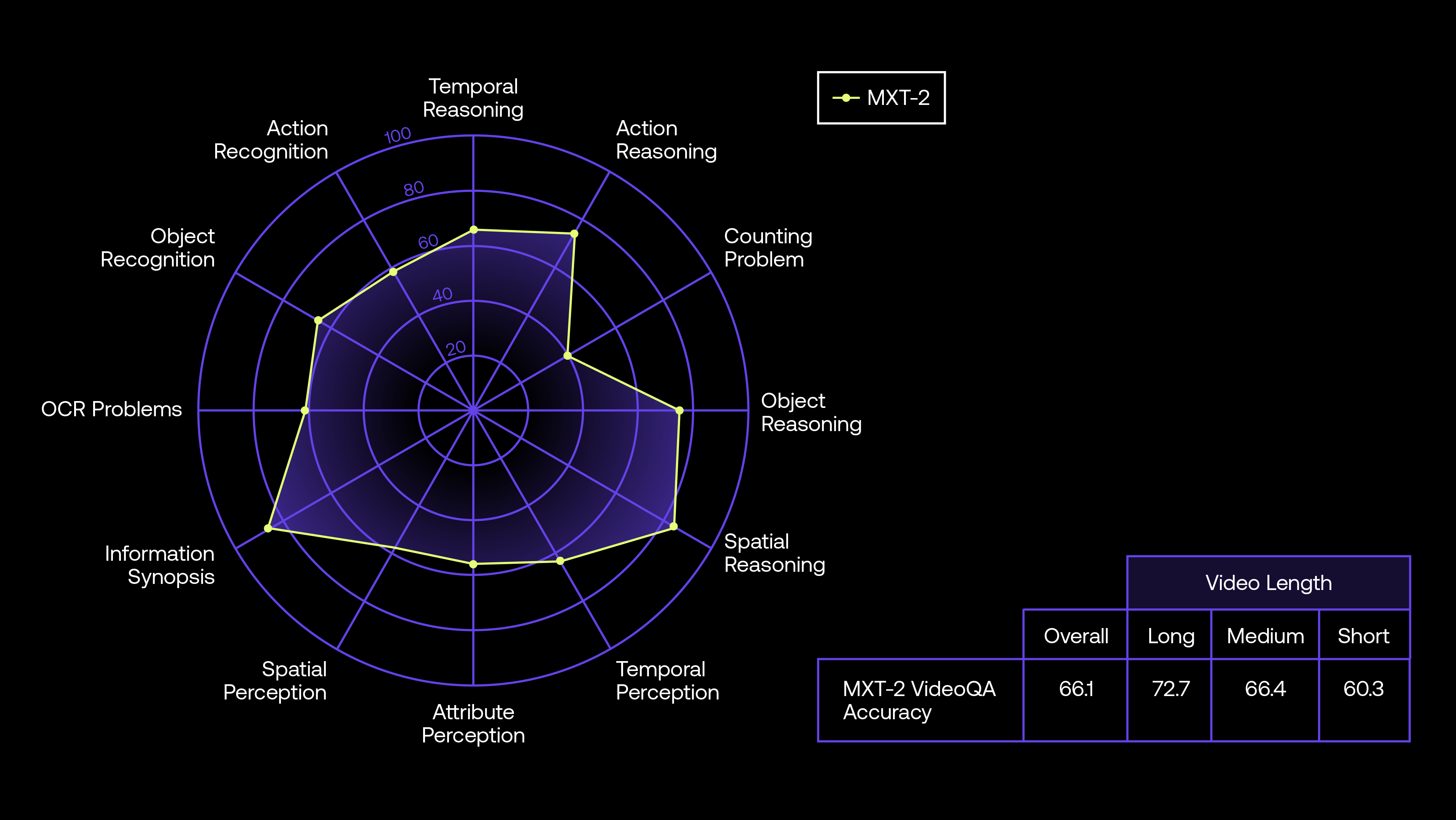

To evaluate MXT-2’s general video understanding capabilities, we tested it on VideoMME. It is an important benchmark for evaluating automated systems on the question-answering task. It particularly represents the ability for a system to truly understand a video. This benchmark is composed of 900 videos. For each video, three questions are asked on the video content, evaluating the model’s capabilities on various tasks such as: temporal reasoning, object recognition, and temporal perception.

In our recent evaluation using the VideoMME benchmark, MXT-2 demonstrated strong video indexing capabilities. The model was tested for its capacity to answer questions from VideoMME by using MXT generated metadata, including transcript and visual descriptions. MXT-2 achieved an overall accuracy of 66.1%, with notable performance on long-form video understanding (72.7%). This positions MXT-2 competitively among industry-leading models such as GPT-4o and Gemini 1.5, particularly highlighting its robustness in handling extensive video data.

Precision in Video Chaptering

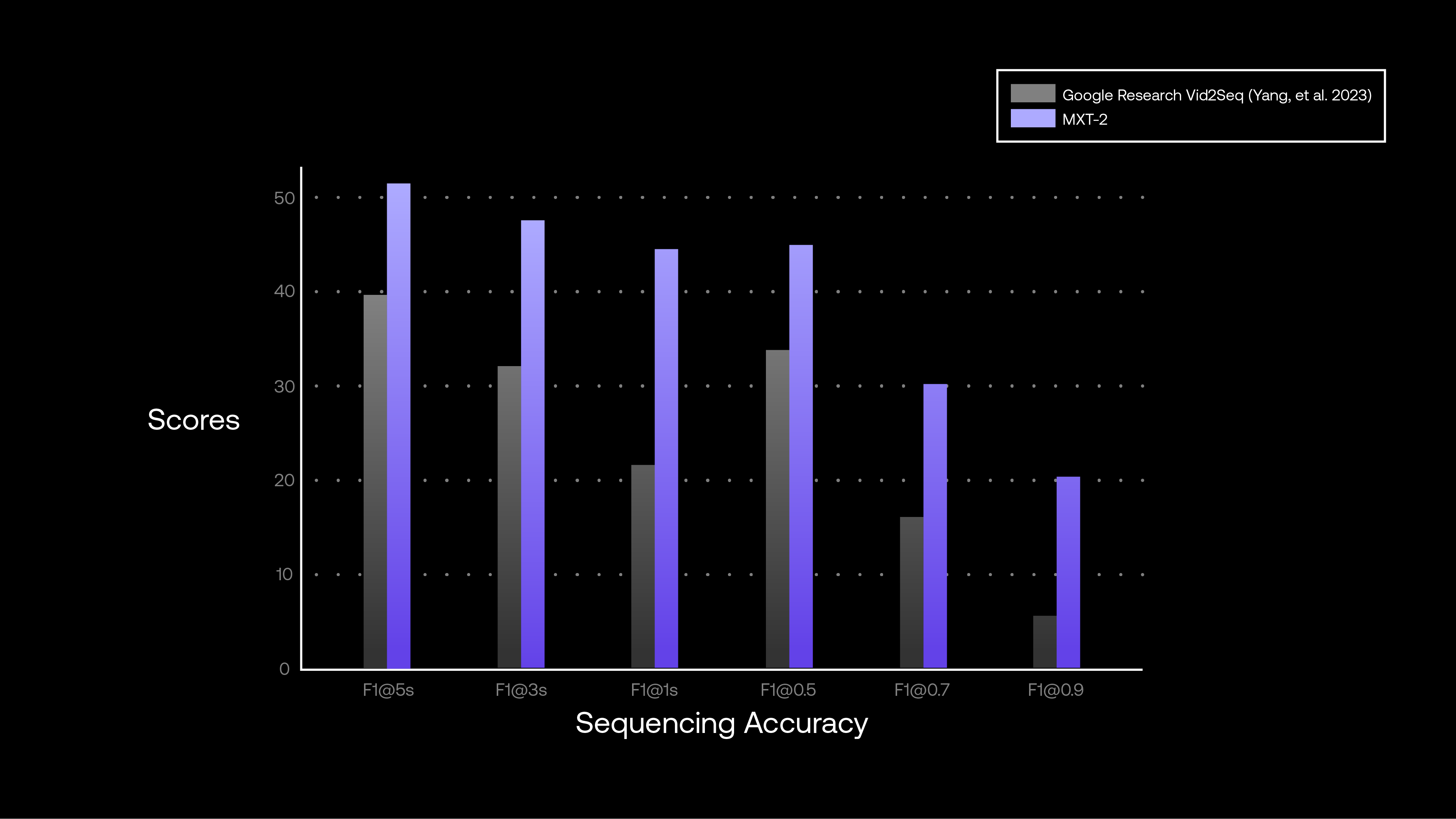

Another significant strength of MXT-2 lies in its automatic video chaptering capabilities. Evaluated on the FineVideo dataset, consisting of over 190 hours of content, MXT-2's generated sequences showed impressive precision. Our findings indicate that sequence locations generated by MXT-2 are typically 15% more precise than those of other leading models like Vid2Seq.

Located sequences are evaluated using precision (P@Ks, P@K), recall (R@Ks, R@K), and F1 scores (F1@Ks, F1@K). Metrics consider distance to ground-truth chapter start (1, 3, 5 seconds thresholds) and Intersection over Union (IoU) of predicted and ground-truth timestamps (0.5, 0.7, 0.9 thresholds).

The F1 IoU at different thresholds confirmed MXT-2's effectiveness. Specifically, MXT-2 achieved an F1 IoU at five seconds accuracy of 51.26%, significantly surpassing Vid2Seq’s 39.57%.

More details about this evaluation can be found in this technical paper presented at the NAB BEIT Conference 2025.

Advances in Summarization

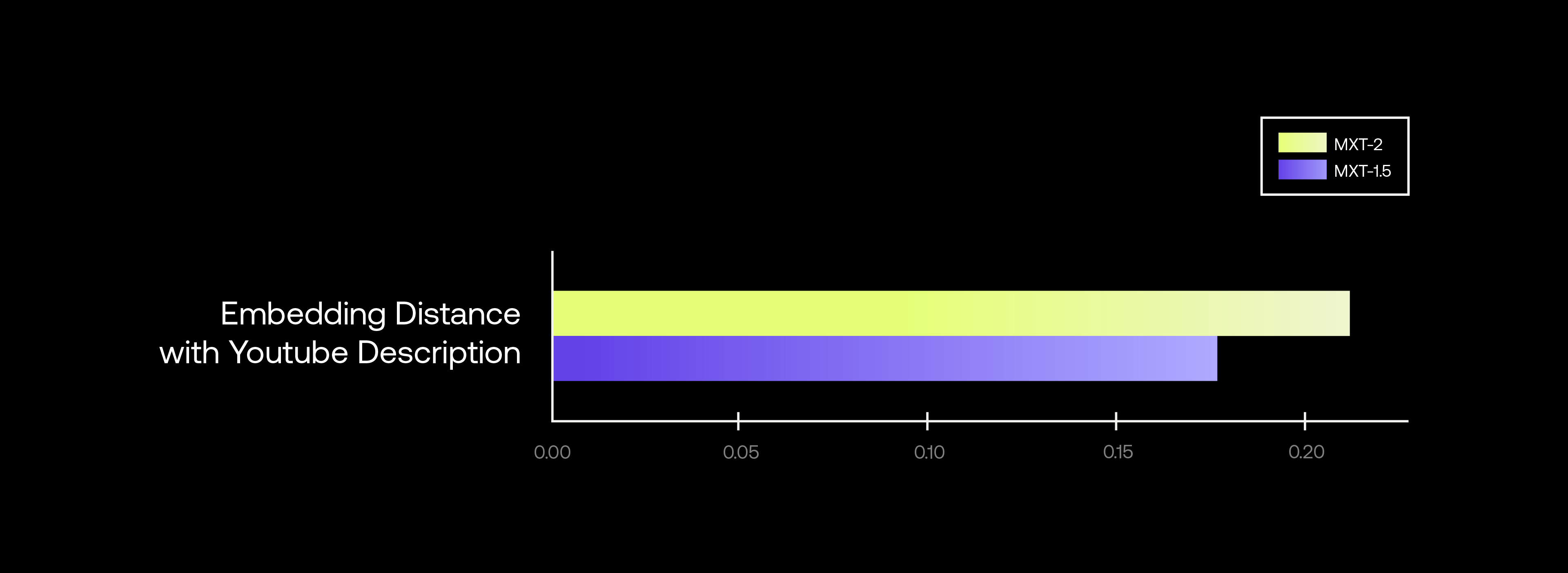

Thanks to its powerful video chaptering, MXT-2 also shines in video summarization. We benchmarked it versus its predecessor, MXT-1.5.

Our evaluation used two approaches: human annotators preference and similarity with a ground truth obtained from YouTube descriptions. Impressively, MXT-2 summaries were preferred over previous-generation MXT-1.5 summaries in 87.5% of cases. Additionally, textual vector embedding comparisons showed MXT-2 summaries were consistently 16% closer to the ground truth, demonstrating a clear improvement in capturing video essence concisely and accurately.

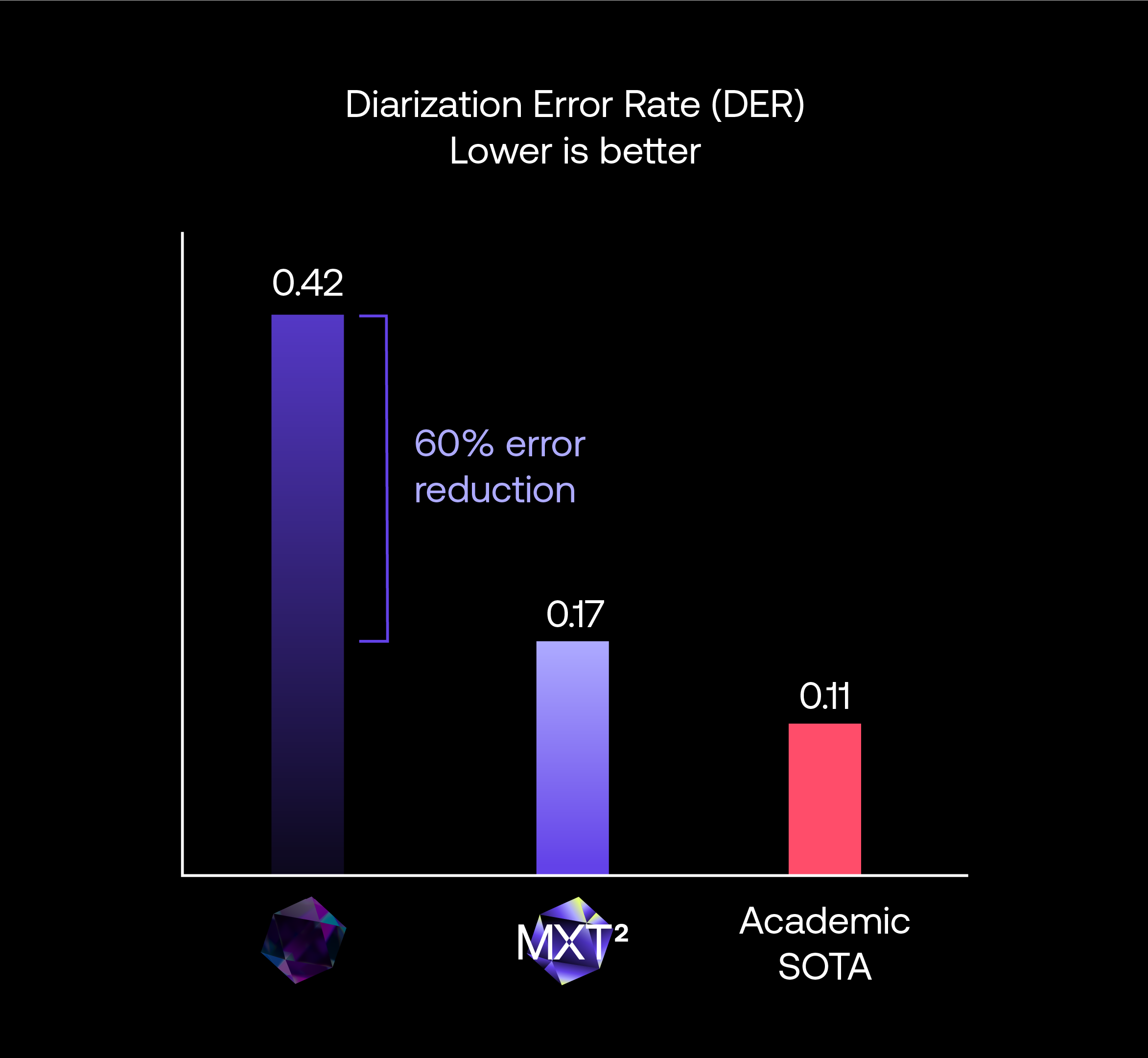

Speaker Diarization

Speaker diarization is the task of determining who spoke and when in an audio or video recording. By identifying the speaker turns, one can better understand a conversation and derive key elements about how people interact with a video.

Speaker diarization plays a crucial role in our hierarchical approach to video understanding.

MXT has used speaker diarization since its first version, but the system has been greatly improved for MXT-2, achieving a 60% Diarization Error Rate improvement compared to MXT-1 on the VoxConverse benchmark. This places us very close to the academic state-of-the-art on this challenging dataset.

Conclusion

The MXT-2 model represents a significant advancement in hierarchical video understanding, demonstrating enhanced capabilities across several key areas. Its strong performance in indexing relevance, particularly in long-form video understanding, positions it competitively against leading models.

The precision achieved in automatic video chaptering, as evidenced by the FineVideo dataset evaluation, showcases its ability to accurately segment video content. Furthermore, MXT-2's improvements in video summarization, with human annotator preference and closer alignment to ground truth, indicate a superior ability to extract and present video essence. Finally, the substantial Diarization Error Rate improvement in speaker diarization underscores MXT-2's refined ability to identify and differentiate speakers, a crucial component of comprehensive video understanding. These advancements collectively highlight MXT-2 as a robust and effective solution for complex video analysis tasks.

While the benchmarks used for evaluating MXT-2 demonstrate its capabilities, it's important to acknowledge certain limitations. For instance, the FineVideo dataset, used for evaluating video chaptering, was created by an LLM (Gemini). This can introduce potential biases or limitations in the dataset's representativeness or the nuances it captures, as LLM-generated data might not always perfectly reflect the full complexity and variability of real-world video content.

Also, none of these datasets contain very long videos, such as 3+ hour TV shows. We know that MXT’s hierarchical approach to video understanding makes it particularly efficient to process long videos, but this is not reflected in this benchmark. Understanding these characteristics of the evaluation datasets is crucial for a comprehensive interpretation of the model's performance.

As we continue to push the boundaries of video understanding for real-world use cases, we now focus on improving the technology through relevance understanding. Not everything has the same weight in a video. Choosing the right modality, frame, or clip to answer a specific question is one way to achieve future breakthroughs.

.png)