The Impact of Frame Sampling on Small Vision Language Models

In the rapidly evolving world of multimodal AI, Vision-Language Models (VLMs) are at the forefront, seamlessly integrating visual and textual information to tackle tasks like visual question answering (VQA). While large VLMs have garnered significant attention, our focus is increasingly shifting towards Small Vision-Language Models (SVLMs) due to their efficiency and scalability. However, comparing these compact models on video tasks has been a complex challenge, primarily due to inconsistencies in how video frames are sampled.

Our research highlights a "frame-sampling bias" in current video benchmarks, where different frame selection strategies obscure the true performance of SVLMs. This work proposes the first frame-accurate benchmark for state-of-the-art SVLMs, evaluated under controlled frame-sampling strategies, confirming the suspected bias and revealing fascinating insights into model behavior.

Why sample frames?

Videos consist of many frames (typically at least 25–30 per second). An hour of footage contains hundreds of thousands of frames, and processing all of them is computationally expensive. Moreover, consecutive frames often carry redundant information. Frame sampling selects the most informative or representative frames, which reduces computation while preserving task-relevant content and performance.

Why does frame sampling matter for VLMs?

Imagine trying to understand a movie by only watching a few random scenes. You might miss crucial plot points or misunderstand the overall narrative. Similarly, for VLMs, the way video frames are selected significantly impacts their ability to process and reason about video content.

We categorize frame sampling into two main protocols:

Standard Sampling

- Uniform-FPS Sampling: Frames are extracted at a fixed rate (e.g., 2 frames per second), with an upper cap for computational efficiency and a lower bound for very short videos.

- Single-Frame Sampling: Only one frame (either the center or the first) is selected, offering insights into a model's performance without temporal cues.

Adaptive Sampling

Adaptive methods aim to intelligently select the most informative frames, rather than relying on a fixed rate. Our study explores two key adaptive algorithms:

- MaxInfo: This training-free method dynamically samples informative frames using the Rectangular Maximum Volume algorithm. It involves uniformly sampling a large set of initial frames, calculating their visual token embeddings, and then applying Max Vol to extract the most informative subset.

- CSTA (CNN-based SpatioTemporal Attention): Adapted from video summarization techniques, CSTA uses a 2D-CNN to capture spatial and temporal relationships, assigning an importance score to each frame and retaining the top-scoring frames.

The Benchmark and Key Findings

We evaluated four prominent SVLMs (Qwen2.5-VL-3B, Ovis2-2B, InternVL3-2B, and SmolVLM2-2.2B) on two large-scale video understanding benchmarks: VideoMME and MVBench. Our evaluation considered uniform-FPS, single-frame, MaxInfo, and CSTA sampling strategies, all under a unified token budget of N_max=96 frames to ensure fair comparisons.

Here are some of the insights we found:

- VideoMME favors uniform sampling: On the VideoMME benchmark, uniform-FPS sampling consistently yielded the best performance across all SVLMs. This suggests that VideoMME, with its longer, more diverse videos, benefits from a steady temporal coverage that captures fine-grained changes.

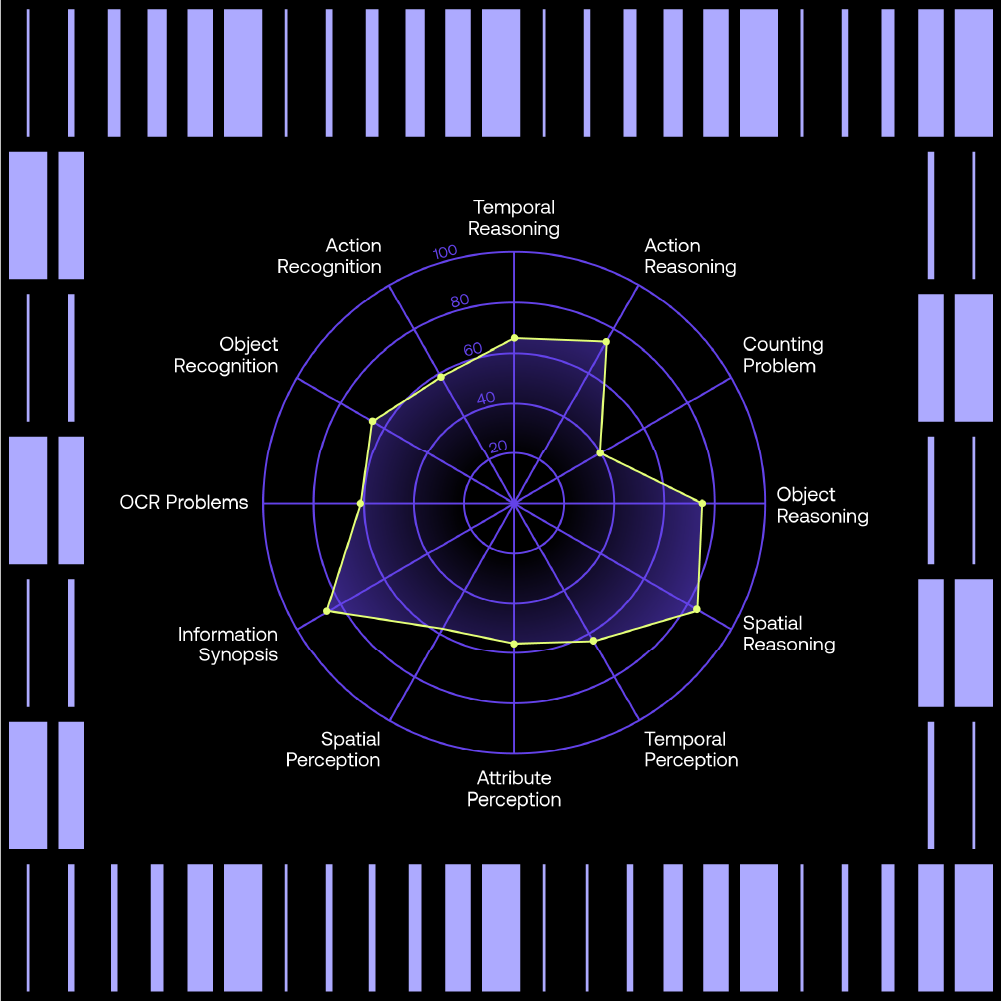

- MVBench is model-specific: In contrast, the optimal strategy for MVBench varied significantly depending on the model. For instance, Qwen2.5 performed best with MaxInfo, SmolVLM2 with a single center frame, InternVL3 with CSTA, and Ovis2 preferred uniform-FPS. This highlights MVBench's diverse tasks, where some are solvable with a few salient frames while others benefit from adaptive selection.

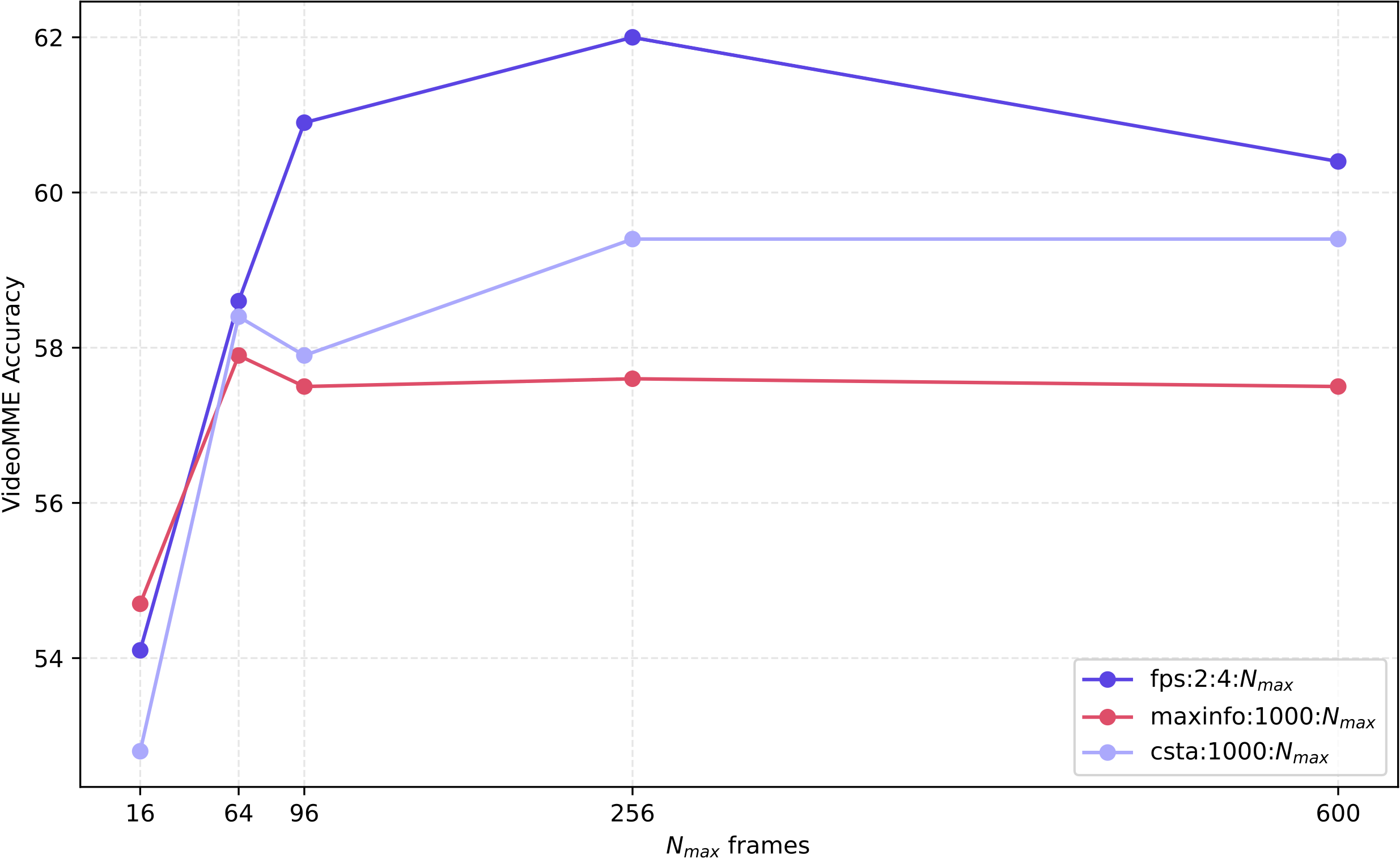

- Ablation study on frame counts: An ablation study with Qwen2.5 on VideoMME demonstrated that uniform-FPS sampling benefited most from larger frame counts, with accuracy peaking around 256 frames. This indicates that while more temporal coverage is generally good, excessive frames can introduce redundancy or noise.

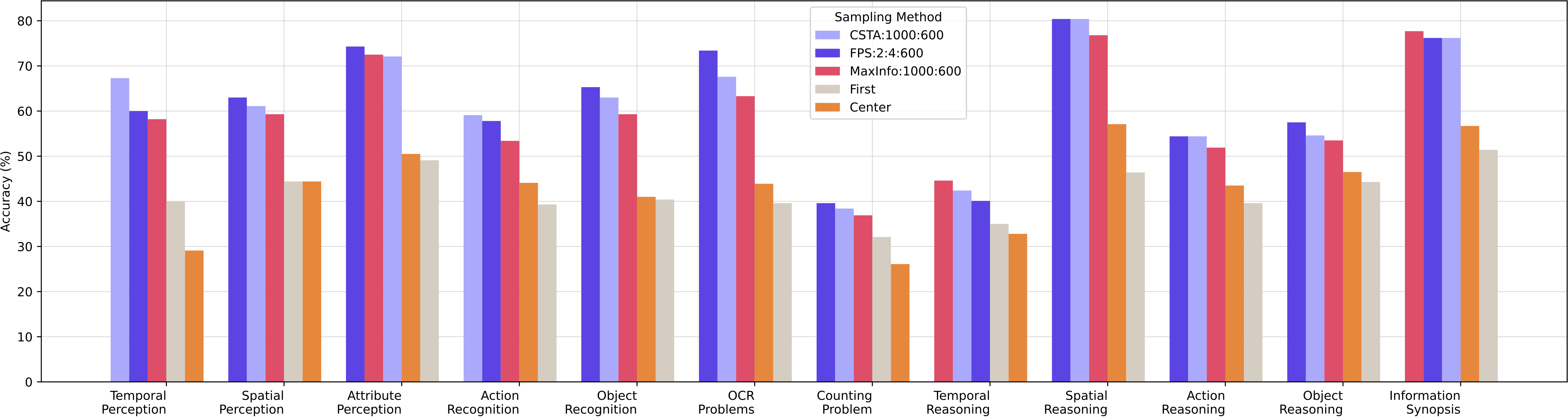

- Adaptive strategies for temporal tasks: While uniform-FPS generally excelled on VideoMME, adaptive strategies like CSTA and MaxInfo showed superiority in specific temporal tasks, such as temporal perception, temporal reasoning, and information synopsis. This is probably because these tasks rely on capturing fine-grained motion dynamics, which adaptive methods are better suited to preserve.

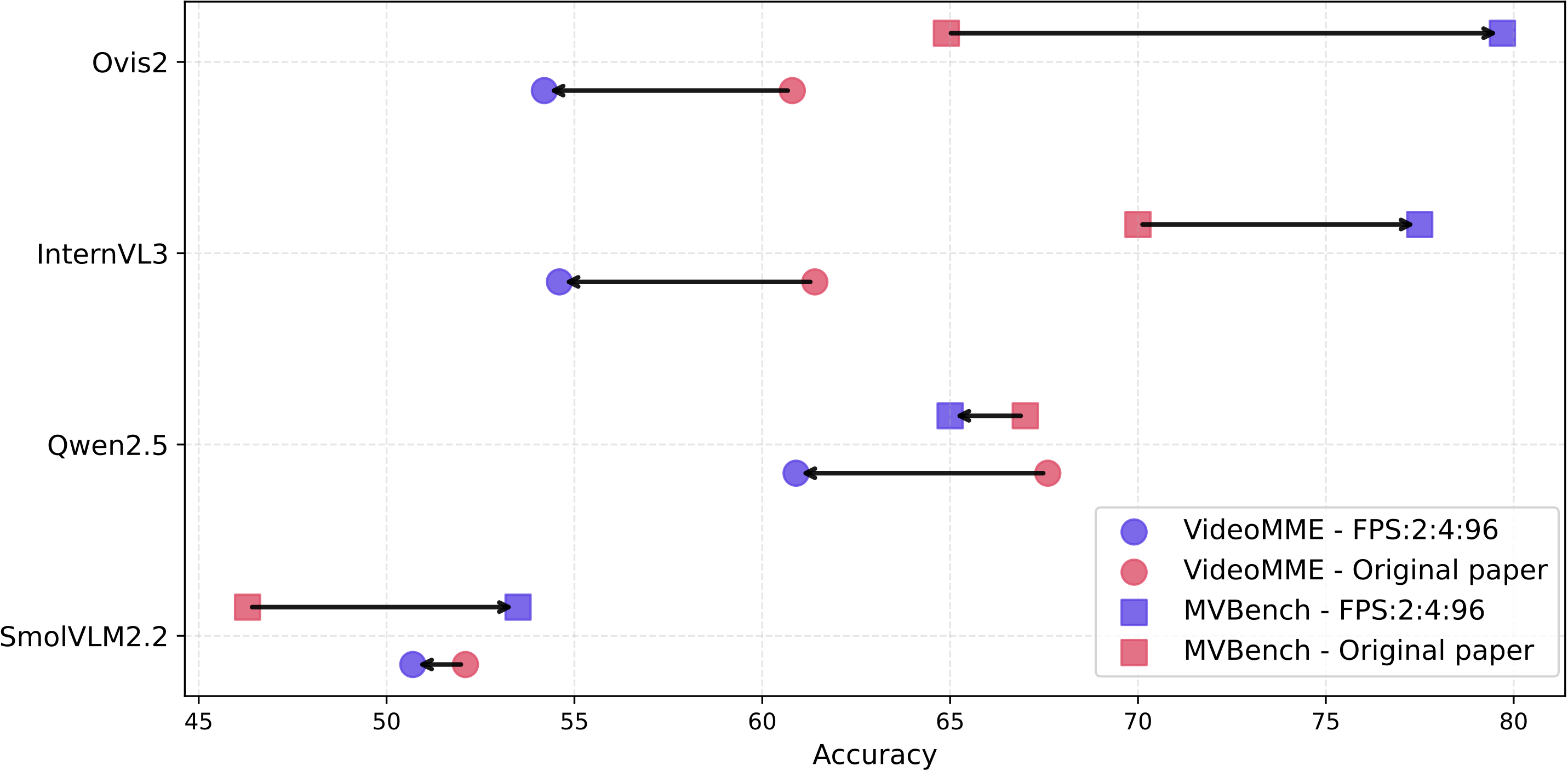

- Reported results mismatch: Finally, our research also revealed substantial discrepancies between previously reported scores and our controlled evaluations, underscoring the importance of standardized sampling protocols for truly reproducible and fair comparisons.

Looking Ahead

This benchmark provides the community with a reproducible and unbiased protocol for evaluating video VLMs. Our findings emphasize the critical need for standardized frame-sampling strategies tailored to each benchmarking dataset in future research.

For future work, we suggest exploring more refined adaptive strategies that can deliver consistent gains across tasks and datasets. Another promising avenue is to integrate frame selection directly into VLM training, enabling models to jointly optimize which frames to sample for a target task.

By open-sourcing our benchmarking code, we invite the community to further explore and build upon our findings, paving the way for more accurate and robust evaluations of video VLMs.

.png)