MXT-1.5 - A Hierarchical Approach to Video Understanding

MXT 1.5's unique approach to video understanding demonstrates strong performances across all types of videos, surpassing GPT-4o and Gemini 1.5 Pro on a popular video benchmark.

MXT approach

MXT stands for Media Indexing Technology and it’s a cutting-edge combination of AI models developed by Moments Lab. Together, they enable the solving of complex video understanding problems.

In contrast to other popular video understanding solutions, MXT is not a single model. Instead, it leverages the performances of several expert models for complex tasks such as speaker diarization, landmark identification, or image captioning. Each of these models is trained or prompted to generate new metadata to better describe every moment of a video.

MXT’s main goal is to generate a 3-level hierarchical indexing of any video content.

- At a shot level, MXT detects a lot of raw information such as spoken word, persons and places, the type of shot, or camera motion.

- These shots are then grouped to create chapters or sequences that share some common attributes. This step is discussed in a recently published preprint and is reproducible by using our open-source notebook.

- Finally, MXT generates an overall summary of the whole video file.

The orchestration of these models together can be challenging, both in terms of infrastructure and model choice. However, we note that a number of these results can be reproduced by the open-source community by following open standards such as MPAI Television Media Analysis and using open weights models.

Once the metadata are extracted with the corresponding timecodes and stored in natural language, they can then be provided to an LLM (GPT-4o in our case) to solve complex video question-answering (VideoQA) tasks over the entire video.

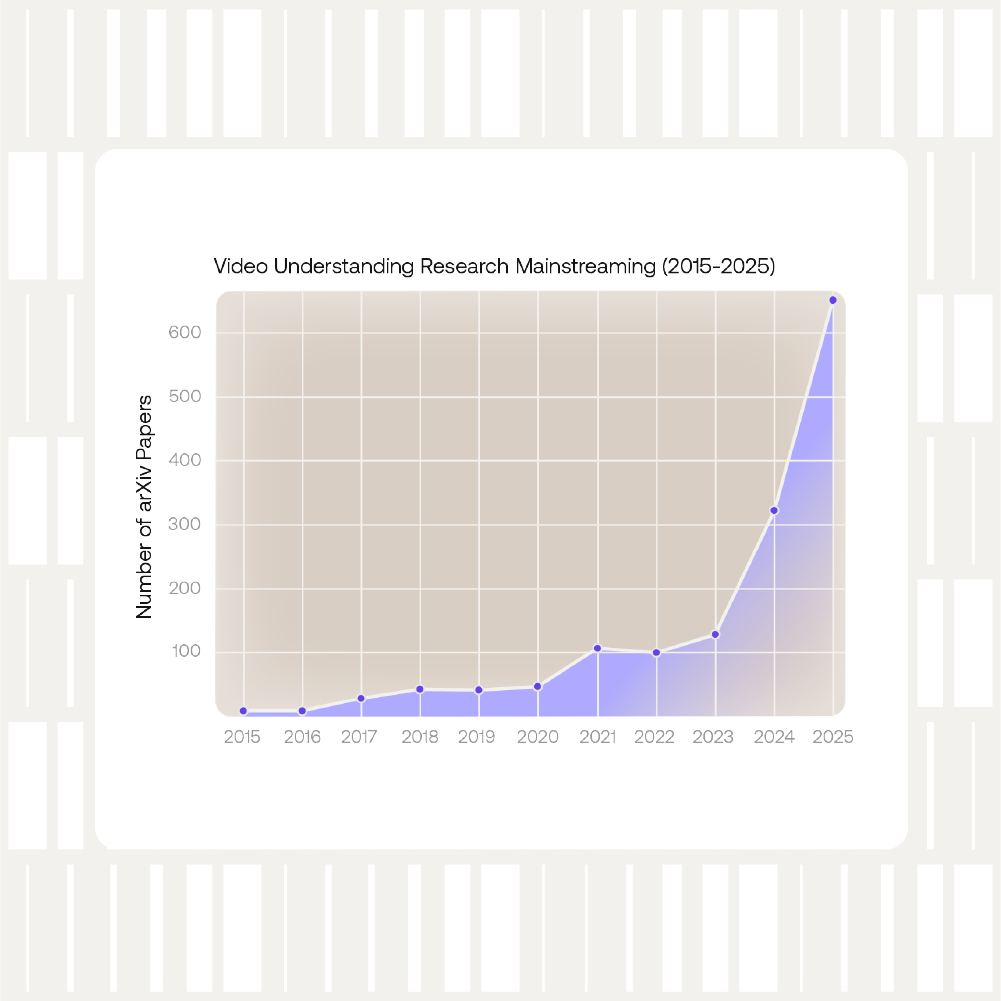

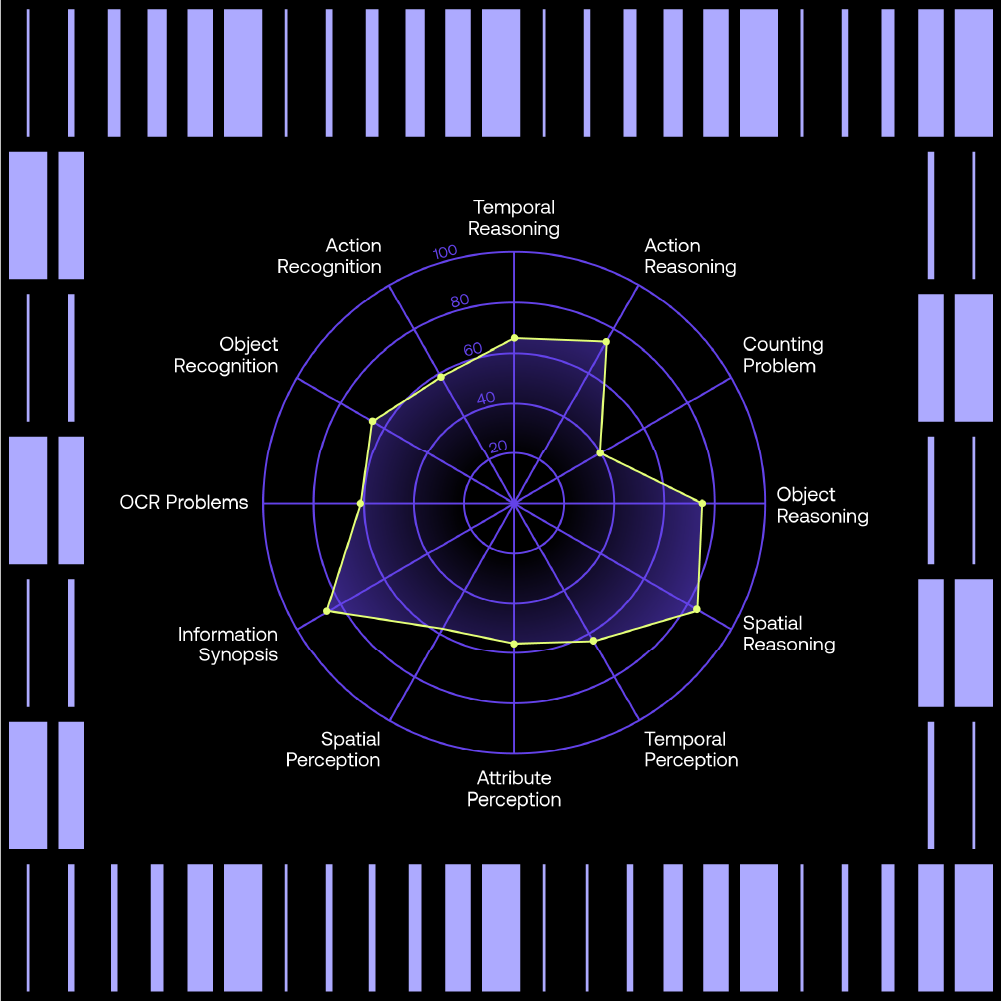

Results on VideoMME Benchmark

To demonstrate the capabilities of our latest MXT-1.5 technology, we evaluated its performance on the VideoMME benchmark. This dataset covers a wide range of video domains and categories, and each video is accompanied by several questions that require different capabilities, such as object detection or temporal reasoning.

MXT-1.5 shows very good performances across the entirety of the benchmark, even beating state-of-the-art models by a large margin on long videos and by a small margin on the overall dataset.

More detailed results also show that the MXT approach is significantly better for Sports or Television video understanding.

Key takeaways

This study of the MXT indexing approach for video understanding proves several points.

First of all, our verticalized approach to video metadata generation generalizes across various video categories and competes with high-end models. We demonstrated that using a set of generated metadata and NLP still outperforms multimodal and visual encoders for VQA on long videos. Probably because plain text English is still a great information compressor and can carry most of the high-level aspects that help answer contextual questions.

We also note that using high-level results of a mixture of experts (most of which are non-generative systems) as input features of a VideoQA pipeline can greatly improve its accuracy. MXT1.5 results are more than 20 points higher than GPT-4o on long videos even if the same LLM is used to generate the final answer in the proposed workflow.

Finally, we want to highlight that the 3-level hierarchical indexing proposed by MXT is also making new workflows possible at scale, especially retrieval augmented generation (RAG) over large video libraries or efficient video chaptering.