Enhancing Video Content Creation with Retrieval Augmented Generation

Our research paper, presented at the 16th International Conference on Human System Interaction (HSI 2024) in Paris, offers a novel solution to the problem of repurposing vast video libraries to craft new narratives.

Video content creation has changed dramatically in recent years, driven by technological advances and a growing demand for diverse, engaging multimedia content. Yet video content creators, journalists, and media managers still struggle to repurpose vast video libraries into new narratives. In this blog post, we walk through the approach from our recent research paper, "Towards Retrieval Augmented Generation over Large Video Libraries", which addresses this problem.

Introduction to Video Library Question Answering (VLQA)

Repurposing video content involves more than just finding relevant clips. It requires an understanding of storytelling, user intent, and often a deep knowledge of past events. Traditional methods rely heavily on manual searches or on sophisticated automated search engines, both of which often demand strong user expertise. AI-assisted content creation, particularly with large language models (LLMs), has revolutionized text-based content generation. Applying these advances to multimedia content, especially video, presents its own challenges.

The Concept of VLQA

Deep learning has long been applied to video: subtasks such as video-text retrieval, dense video captioning, and video chaptering are already well studied. More recently, many works have also tackled Video Question Answering (VideoQA), which draws on advances in natural language processing and computer vision to interpret video content and answer questions about it.

We propose Video Library Question Answering (VLQA) as a novel task that integrates Video Question Answering with Video-Text retrieval. VLQA extends the traditional VideoQA task by incorporating a library of videos rather than focusing on a single video. This introduces complexities such as the efficient indexing and retrieval of relevant video segments from a large collection based on the questions posed. The objective is to provide precise answers by identifying pertinent video clips across an extensive video library, integrating context from multiple sources, and retrieving associated text descriptions or subtitles when necessary.

To solve this problem, we introduce a video indexing pipeline connected to a Retrieval Augmented Generation (RAG) system. The system uses LLMs to generate search queries that retrieve relevant video moments indexed by both speech and visual metadata. An answer generation module then integrates user queries with this metadata to produce responses that include specific video timestamps, facilitating seamless content creation.

Architecture of the RAG System

The proposed VLQA system, which serves as a baseline for this new task, is built around a retriever module and an answer generation module, both of which rely on the generated video metadata.

Here's a closer look at how each component functions:

Retriever Module

The retriever module's primary role is to generate queries for a text-based search engine, such as OpenSearch. Using an LLM, the module creates multiple search queries composed of keywords. These queries retrieve video moments represented as text documents, which are then filtered and passed on to the answer generation module. This process ensures that only the most relevant moments are considered for the final content creation, balancing precision and speed.
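The retrieval flow described above can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: the function names, the `transcript` and `caption` field names, and the shape of a hit (`id`, `score`) are all assumptions, and both the LLM and the search engine are passed in as plain callables so the sketch stays independent of any specific client library.

```python
from typing import Callable, Dict, List

# Hypothetical field names for an indexed video moment; the source gives no schema.
TEXT_FIELDS = ["transcript", "caption"]


def generate_queries(question: str, llm: Callable[[str], str], n: int = 3) -> List[str]:
    """Ask the LLM for n keyword queries, one per line, and parse the reply."""
    prompt = (
        f"Write {n} short keyword search queries, one per line, "
        f"to find video moments relevant to: {question}"
    )
    # Strip list dashes and whitespace, then drop empty lines.
    lines = [line.strip(" -\t") for line in llm(prompt).splitlines()]
    return [line for line in lines if line][:n]


def build_search_body(keywords: str, size: int = 10) -> Dict:
    """Build a query-DSL request body using a multi_match clause."""
    return {
        "size": size,
        "query": {"multi_match": {"query": keywords, "fields": TEXT_FIELDS}},
    }


def retrieve(
    question: str,
    llm: Callable[[str], str],
    search: Callable[[Dict], List[Dict]],
    top_k: int = 5,
) -> List[Dict]:
    """Run every generated query, merge hits by id, keep the top_k by score."""
    best: Dict[str, Dict] = {}
    for keywords in generate_queries(question, llm):
        for hit in search(build_search_body(keywords)):
            current = best.get(hit["id"])
            # A moment found by several queries keeps its highest score.
            if current is None or hit["score"] > current["score"]:
                best[hit["id"]] = hit
    return sorted(best.values(), key=lambda h: h["score"], reverse=True)[:top_k]
```

In a real deployment, `search` would wrap a call to the search engine and map its response into the assumed `id`/`score` dictionaries; keeping the highest score per moment is just one simple filtering choice among many.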

Answer Generation Module

The answer generation module gathers the initial user query and the metadata from the retrieved video moments, combining them into a larger prompt for the LLM. The LLM is tasked with generating a final answer that includes references to the video moments in a specific format. This allows for easy replacement with hyperlinks to the video moments hosted online, making the system highly efficient and user-friendly.
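As an illustration of this step, the sketch below assembles a prompt from the user query and the retrieved moments, then swaps reference markers in the LLM's answer for hyperlinks. The source only says references follow "a specific format", so the `[[moment:ID]]` marker, the moment fields (`id`, `video`, `start`, `end`, `text`), and the URL layout are all assumptions made for the example.

```python
import re
from typing import Dict, List

# Assumed citation marker; the actual reference format is not given in the source.
REF_PATTERN = re.compile(r"\[\[moment:([A-Za-z0-9_-]+)\]\]")


def build_prompt(question: str, moments: List[Dict]) -> str:
    """Combine the user request and the retrieved moment metadata into one prompt."""
    context = "\n".join(
        f"[[moment:{m['id']}]] ({m['start']:.0f}s-{m['end']:.0f}s) {m['text']}"
        for m in moments
    )
    return (
        "Answer the request using only the video moments below. "
        "Cite moments with their [[moment:ID]] marker.\n\n"
        f"Moments:\n{context}\n\nRequest: {question}\nAnswer:"
    )


def link_references(answer: str, moments: List[Dict], base_url: str) -> str:
    """Replace each known [[moment:ID]] marker with a link to that moment."""
    by_id = {m["id"]: m for m in moments}

    def to_link(match: "re.Match[str]") -> str:
        moment = by_id.get(match.group(1))
        if moment is None:
            return match.group(0)  # leave unknown ids untouched
        # Hypothetical URL layout: <base>/<video>?t=<start second>
        url = f"{base_url}/{moment['video']}?t={int(moment['start'])}"
        return f"[{moment['id']}]({url})"

    return REF_PATTERN.sub(to_link, answer)
```

Leaving unknown ids untouched makes it easy to spot references the LLM invented rather than silently turning them into broken links.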

Using Generated Metadata for Video Data

Rather than relying on abstract embeddings, our baseline approach uses generated metadata for video data. This involves extracting and processing metadata such as transcriptions and image captions from the video content. By focusing on text-based metadata, we can more easily integrate this information with LLMs and ensure compatibility with various search engines and models.
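To make the idea of a text-only video moment concrete, here is a small sketch of how speech segments and frame captions might be merged into one searchable document. The `VideoMoment` class, its fields, and the input dictionaries are hypothetical; they stand in for whatever the diarization, transcription, and captioning tools actually emit.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class VideoMoment:
    """A time window of a video described purely by text metadata."""
    video_id: str
    start: float
    end: float
    speech: List[str] = field(default_factory=list)
    captions: List[str] = field(default_factory=list)

    def to_document(self) -> Dict:
        """Flatten the moment into a dictionary a text search engine can index."""
        return {
            "id": f"{self.video_id}_{int(self.start)}",
            "video": self.video_id,
            "start": self.start,
            "end": self.end,
            "transcript": " ".join(self.speech),
            # dict.fromkeys drops repeated captions while keeping their order.
            "caption": " ".join(dict.fromkeys(self.captions)),
        }


def build_moment(
    video_id: str,
    start: float,
    end: float,
    segments: List[Dict],
    frame_captions: List[Dict],
) -> VideoMoment:
    """Attach speech that overlaps, and captions sampled inside, [start, end)."""
    moment = VideoMoment(video_id, start, end)
    for seg in segments:  # assumed shape: {"start", "end", "speaker", "text"}
        if seg["start"] < end and seg["end"] > start:
            moment.speech.append(f"{seg['speaker']}: {seg['text']}")
    for cap in frame_captions:  # assumed shape: {"time", "text"}
        if start <= cap["time"] < end:
            moment.captions.append(cap["text"])
    return moment
```

Because the resulting document is plain text plus timestamps, it can be indexed by a keyword search engine and pasted directly into an LLM prompt without any embedding model in between.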

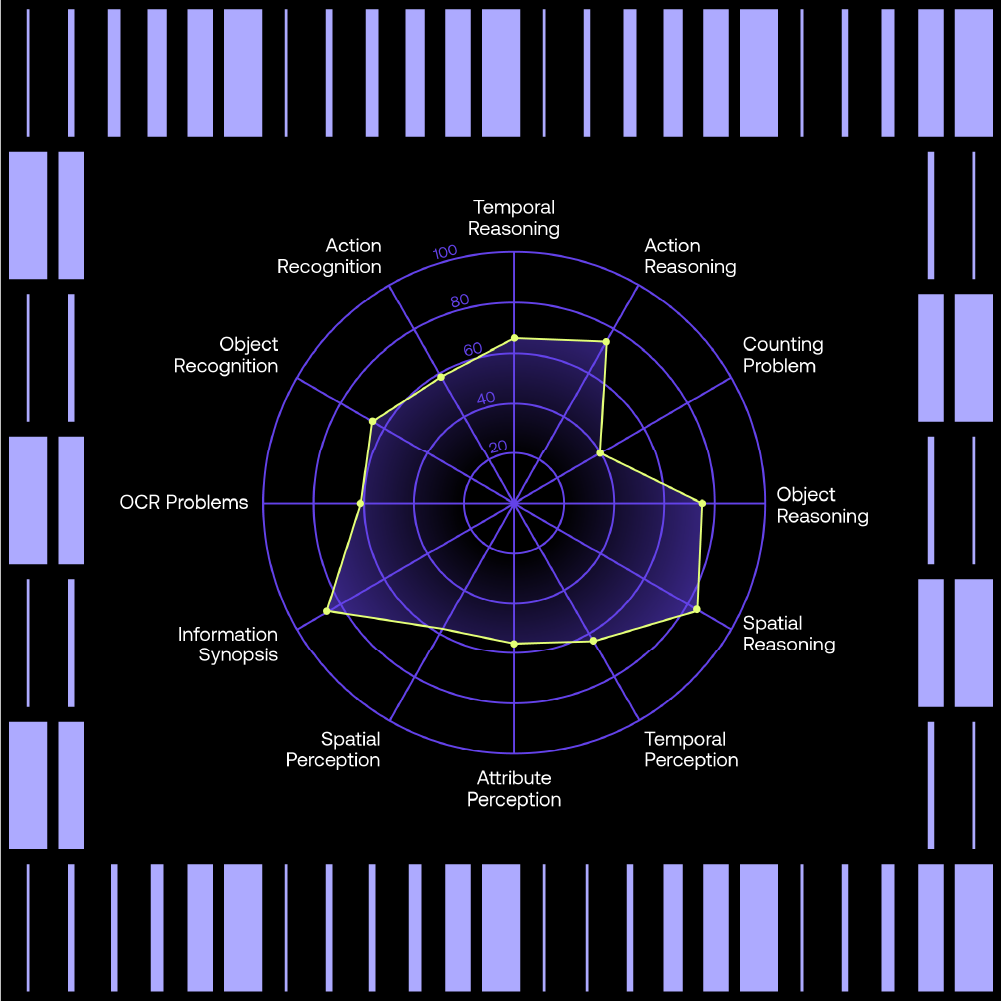

Experimental Setup and Results

To demonstrate the efficacy of the VLQA system, we conducted experiments using a large video library of NASA assets. The library consisted of 9,388 video files, split into 372,181 video moments (about 40 moments per video on average). Each moment was indexed with metadata extracted by pyannote for speaker diarization, Whisper for speech transcription, and BLIP-2 for image captioning.

Use Cases and Performance

The system successfully answered conversational text queries by referencing relevant video moments. For example, it could help find clips for a 2-minute documentary about astronauts eating on the ISS or create a trailer script for the Apollo missions. The responses generated were both accurate and contextually appropriate, showcasing the system's potential in real-world applications.


Future Directions

We highlight the need for standardized benchmarks to evaluate VLQA systems effectively. Future work will focus on creating comprehensive datasets and benchmarks to assess performance accurately. Additionally, incorporating a multimodal reranker module could further improve the system's ability to choose the correct video moments, and with it overall accuracy and reliability. Further development of the system should also ensure that the user's creativity is preserved when it is used as a tool for AI-assisted creation.

Conclusion

The VLQA system represents a significant advancement in AI-assisted video content creation, offering a powerful tool for repurposing large video libraries. By integrating RAG with sophisticated metadata indexing and retrieval techniques, this approach opens new possibilities for multimedia content creation, particularly in fields like journalism and documentary filmmaking. As the technology continues to evolve, we can expect even more innovative applications and improved performance, making video content creation more accessible and efficient than ever before.
