Welcome to Moments Lab Research

Our goal is to contribute to the global research effort and push the boundaries of what’s possible with AI video understanding.

Moments Lab has been working in the video industry for more than six years, creating strong partnerships with broadcasters, TV networks and sports rights holders. We have witnessed the complexity and the overwhelming diversity of video content that is produced.

Through our technology and others, AI is already used by content producers and creators to accelerate their workflows and take advantage of their entire video library, mostly thanks to video indexing technologies powered by speech and image processing. But the margin for improvement remains huge.

Only a very small portion of the world's video archives is digitized. The vast majority cannot be efficiently analyzed by off-the-shelf AI systems because it falls entirely outside their training domains. Additionally, current AI models can have strong biases that prevent their use in some countries or in some sensitive sectors. News journalism, for instance, requires systems and deep learning models that have been trained with fairness in mind and that can generate explainable results.

Understanding and generating content from text and image input has recently been adopted by the general public thanks to ChatGPT and multimodal LLM-powered technologies. This was made possible by decades of work and by major breakthroughs that were shared publicly and built upon by the global research community (BERT, Transformers, GPT-2, LLaMA, Mistral, and more). Meanwhile, even the most recent AI models still struggle to efficiently process videos in the wild. Videos are highly complex objects with very high-dimensional variations that today’s models can hardly understand.

Yet, videos contain an important part of human knowledge: human history through journalism and documentaries, human humor and emotions through entertainment and sport, human craft knowledge through tutorials, and so much more. We believe the next frontier for building content from human knowledge lies in efficiently understanding videos.

Several works have been published, and research entities across the world are tackling video understanding through various architectures. V-JEPA from FAIR learns by reconstructing masked videos in an abstract latent space, whereas the Video Mamba model suite uses State-Space Models (SSMs) to handle long-range dependencies in video. Recent advances reported in the Gemini 1.5 paper also show that scaling large transformers’ context windows up to 10M tokens can help to achieve complex understanding and retrieval tasks over long-form videos.

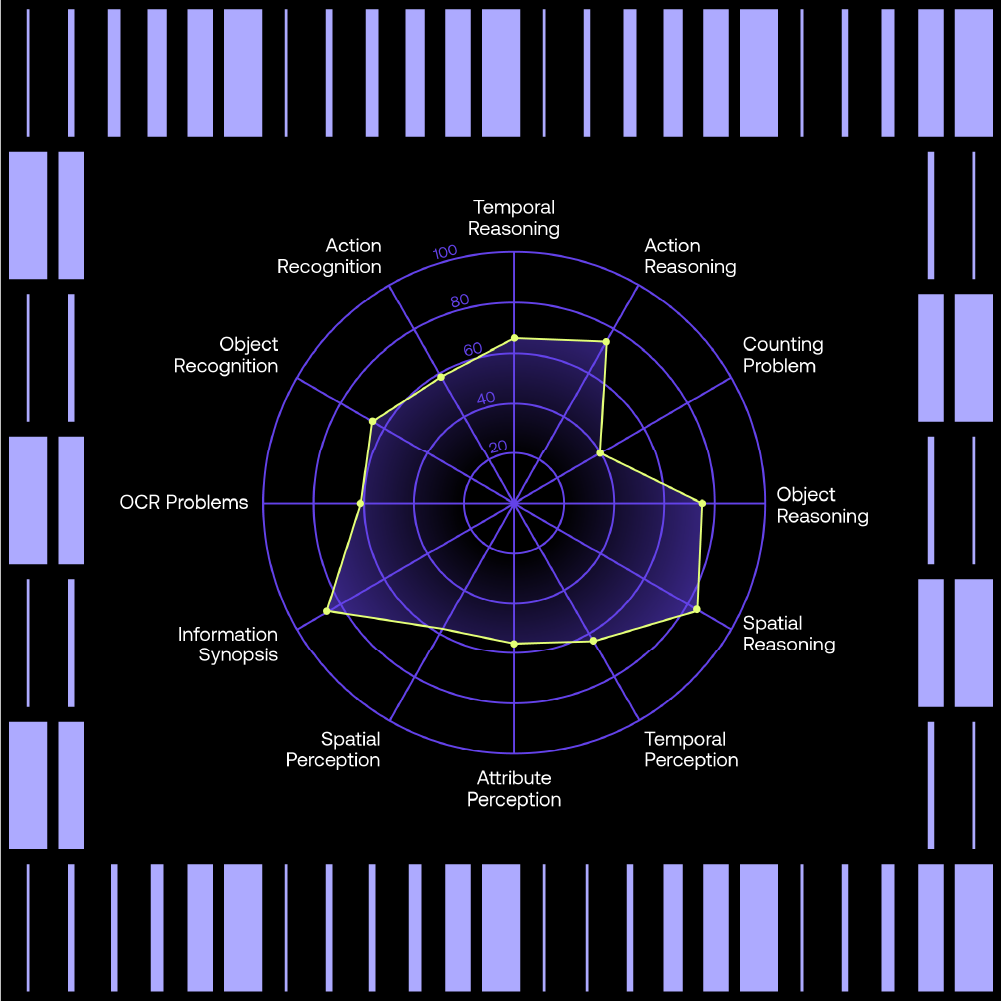

Yet each of these methods falls short on at least one of the following: explainability, accuracy, diverse representation of the world, or the ability to be deployed on compute-efficient hardware.

Our Commitment to Transparent and Open Research

Our goal at Moments Lab is to contribute to this global research effort to push the boundaries of what is possible for video understanding, with a strong emphasis on building models that learn fair representations of the world in its diversity and multiculturalism. Our main areas of focus include long-form video understanding, vision-language models, and information retrieval.

This work is made possible thanks to very strong academic and corporate partners such as Telecom SudParis and TF1 Group. We believe that for our work to benefit our partners and other organizations working with video content, it has to be a transparent and open research effort. This is why we commit to sharing our work with the scientific community together with, as often as possible, the data and models that we create.

Stay tuned for our latest publications!
